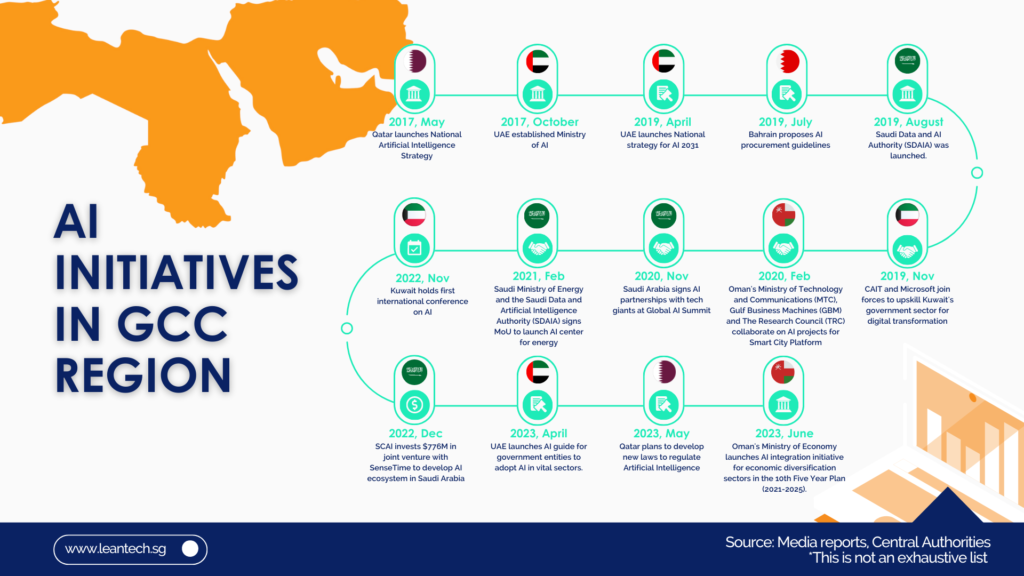

Over the past five years, the use of AI to improve efficiency and drive business performance has grown substantially across many sectors. AI was once associated mainly with futuristic robots and autonomous vehicles. Today its applications are more subtle but equally powerful, and they improve how organisations operate day to day.

In banking, AI is now used primarily for:

- Offering automated services to customers through chatbots, voice banking and robo-advice.

- Screening applicants for credit and other financial products, including ‘know your customer’ (KYC) checks.

- Identifying new financial products to promote to existing customers.

- Detecting suspicious transactions in order to monitor risk, report and comply with anti-money laundering and financial crime regulations. Several global institutions are developing algorithms that analyse past transactions to predict unusual or suspicious activity.

- Screening job candidates to assess their suitability during recruitment.
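To make the idea of "analysing past transactions to predict unusual activity" more concrete, here is a minimal sketch of one very simple approach: a z-score rule that flags a new transaction when its amount is far from a customer's historical average. The function name `flag_unusual`, the three-standard-deviation threshold and the sample amounts are all hypothetical. Real transaction-monitoring systems typically combine many more signals and models, and this is not a description of how any particular bank does it.

```python
from statistics import mean, stdev


def flag_unusual(history: list[float], new_amount: float, threshold: float = 3.0) -> bool:
    """Hypothetical helper: flag a transaction whose amount lies more than
    `threshold` sample standard deviations from the customer's historical mean."""
    if len(history) < 2:
        # Too little history to estimate spread; leave it to other controls.
        return False
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Every past amount was identical, so any different amount stands out.
        return new_amount != mu
    z_score = abs(new_amount - mu) / sigma
    return z_score > threshold


# Hypothetical transaction history for a single customer.
past = [120.0, 95.5, 130.0, 110.0, 101.25, 125.0]
print(flag_unusual(past, 115.0))   # False: close to the usual amounts
print(flag_unusual(past, 5000.0))  # True: far outside the usual range
```

A fixed statistical rule like this is easy to explain to regulators and auditors, which is one reason simple baselines are often kept alongside more complex predictive models.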


Using AI in these areas creates opportunities for greater efficiency, higher revenue, better risk management and stronger compliance. However, it also introduces significant ethical and security risks that any bank adopting AI must manage properly. These risks include:

Privacy Concerns

AI applications often rely on customer data held by banks, which raises the risk of breaching privacy laws. Because privacy standards and consumer protection laws are being strengthened worldwide, banks should be transparent with customers about how their data is used for AI, obtain explicit consent, and avoid relying on implied consent.

Effectiveness of Automated Services

AI-powered automated services, such as chatbots and voice banking, must work seamlessly and effectively. If these systems are rudimentary and cannot adequately answer customer queries, they can frustrate and alienate customers, causing reputational damage and lost revenue for the bank.

Unconscious Bias

AI used to screen customers and employees may perpetuate biases embedded in its training data or in the algorithms themselves. This could hinder a bank’s ability to meet gender and diversity targets and limit its outreach to customers from lower socioeconomic backgrounds, with knock-on effects for the wider community. Drawing on diverse data sources, and on algorithm developers from diverse backgrounds, can help reduce bias.
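One common way to check a screening model for this kind of bias is to compare its approval (selection) rates across groups. The sketch below assumes decisions are available as hypothetical `(group, approved)` pairs, and it applies the "four-fifths" heuristic from US employment-selection guidance. Under that heuristic, a lowest-to-highest selection-rate ratio below 0.8 is treated as a sign of possible adverse impact. The helper names and data are illustrative only. A low ratio is a prompt for investigation, not proof of unlawful discrimination, and the appropriate fairness test will depend on the jurisdiction and use case.

```python
from collections import defaultdict


def selection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Hypothetical helper: share of positive decisions (e.g. approvals) per group."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def impact_ratio(rates: dict[str, float]) -> float:
    """Hypothetical helper: lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical screening outcomes: (group label, approved?)
outcomes = ([("A", True)] * 60 + [("A", False)] * 40
            + [("B", True)] * 40 + [("B", False)] * 60)

rates = selection_rates(outcomes)
ratio = impact_ratio(rates)
print(rates)            # {'A': 0.6, 'B': 0.4}
print(round(ratio, 3))  # 0.667
if ratio < 0.8:  # "four-fifths" heuristic threshold
    print("Possible adverse impact: review the model and its training data")
```

Running a check like this regularly, rather than once at launch, helps catch bias that creeps in as customer populations and data change over time.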

Regulatory Compliance

AI models used to make decisions about customers and employees must comply with evolving legal and regulatory requirements. For example, credit decisions should be based on an applicant’s actual income and capacity to repay, rather than on projected propensities divorced from their individual circumstances. Final decisions, such as credit approvals, should remain subject to human oversight.

Cybersecurity Vulnerabilities

Banks must implement robust security controls to reduce the risk of cyber attacks. Unauthorised access to employee and customer data can lead to breaches of privacy regulations, substantial penalties and private class actions. Complying with cybersecurity laws and investing in expertise and innovative technology are crucial for staying ahead of cyber threats and for embedding a cyber-focused approach to risk management throughout the organisation.

So what’s the right approach?

AI offers significant opportunities to improve efficiency and meet regulatory requirements. Although it brings notable ethical and security risks, proactive boards and management can address these challenges effectively. Banks must nonetheless remain vigilant as AI adoption continues to grow rapidly. Governments are also developing AI-specific regulations and establishing dedicated AI regulators, which makes managing ethical and security concerns a matter of governance and legal liability. Banks may also choose to develop their own self-regulatory AI standards, demonstrating trustworthiness, accountability and responsibility to the public as they continue to adapt to AI technology.
